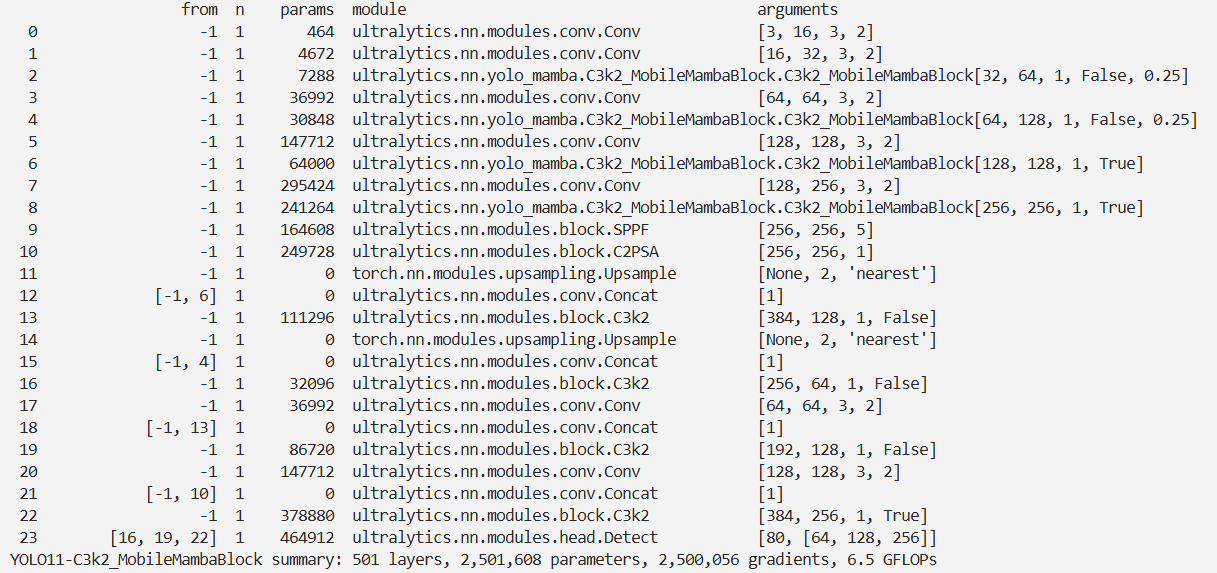

YOLOv11改进 – Mamba C3k2融合MobileMambaBlock在轻量前提下,融合全局、多尺度局部特征并保留高频细节

# 前言

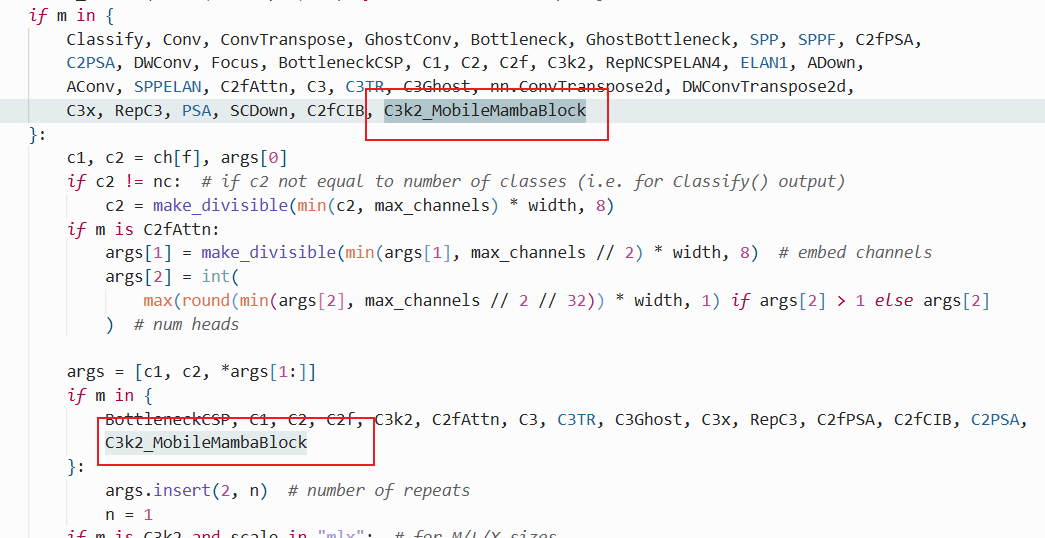

本文介绍了MobileMamba Block,其设计核心是在轻量前提下融合全局、多尺度局部特征并保留高频细节,兼顾推理效率。它是模型的核心功能单元,采用“对称局部感知 + MRFFI 核心模块 + FFN 增强”架构。MRFFI 模块是关键,将输入特征按通道拆分为三部分处理:WTE - Mamba 提取全局和高频细节,MK - DeConv 实现多尺度局部感知,Eliminate Redundant Identity 压缩冗余。我们将其引入 YOLOv11,在根目录下添加相关代码文件,并在ultralytics/nn/tasks.py中注册,还给出了配置文件和实验脚本。

文章目录: YOLOv11改进大全:卷积层、轻量化、注意力机制、损失函数、Backbone、SPPF、Neck、检测头全方位优化汇总

专栏链接: YOLOv11改进专栏

介绍

摘要

以往关于轻量级模型的研究主要集中在卷积神经网络(CNN)和基于 Transformer 的架构上。CNN 凭借其局部感受野,难以捕捉长距离依赖关系;而 Transformer 尽管具备全局建模能力,但在高分辨率场景下却受限于二次计算复杂度。最近,状态空间模型因其线性计算复杂度在视觉领域受到广泛关注。尽管当前的基于 Mamba 的轻量级模型计算量(FLOPs)较低,但其吞吐量表现并不理想。

在本文中,我们提出了 MobileMamba 框架,旨在平衡效率与性能。我们设计了一个三阶段网络,以显著提升推理速度。在细粒度层面上,我们引入了多感受野特征交互(MRFFI)模块,该模块包含长距离小波变换增强型 Mamba(WTE-Mamba)、高效多核深度卷积(MK-DeConv)以及消除冗余恒等映射组件。该模块融合了多感受野信息,并增强了高频细节的提取能力。

此外,我们还采用了特定的训练和测试策略,以进一步提高性能和效率。MobileMamba 的 Top-1 准确率高达 83.6%,超越了现有的最先进方法,并且在 GPU 上的速度比 LocalVim 最高快 21 倍。在高分辨率下游任务上进行的广泛实验表明,MobileMamba 优于当前的高效模型,实现了速度与精度的最佳平衡。

完整代码已在 https://github.com/lewandofskee/MobileMamba 开源。

文章链接

论文地址:论文地址

代码地址:代码地址

基本原理

MobileMamba Block 的设计核心是在轻量前提下,融合全局、多尺度局部特征并保留高频细节,同时兼顾推理效率,整体遵循“局部感知→多感受野交互→特征增强”的逻辑,具体设计思路如下:

1. 定位:Block 在网络中的角色

每个 MobileMamba Block 是模型的核心功能单元,串联于“三阶段网络”的各阶段中(论文 3.1 节)。其核心目标是解决传统轻量模型的痛点:CNN 局部感知局限、Transformer 计算复杂、现有 Mamba 模型 throughput 不足,实现“全局+局部”特征的高效融合。

2. 整体结构设计:对称布局+核心交互模块

Block 采用“对称局部感知 + MRFFI 核心模块 + FFN 增强”的架构(论文 3.2 节+图 4(c)):

- 两侧对称设置 局部信息感知模块:通过 3x3 深度卷积(DWConv)+ 批量归一化(BN)实现,快速捕捉局部相邻特征,为后续交互提供基础。

- 中间嵌入 多感受野特征交互(MRFFI)模块:Block 的核心创新,负责拆分并处理不同通道的特征,融合全局、多尺度局部信息。

- 两端搭配 前馈网络(FFN):通过“卷积+激活”的简单结构增强特征表达能力,同时控制计算复杂度(扩张系数设为 2,平衡性能与效率)。

- 保留 残差连接(Identity Skip):直接传递原始输入特征,缓解深层网络梯度消失问题,同时降低计算冗余(论文 3.2 节)。

3. 核心:MRFFI 模块的三分支设计

MRFFI 模块是 Block 实现“多感受野融合”的关键,将输入特征按通道维度拆分为 3 部分,分别处理后拼接输出(论文 3.2 节):

分支 1:WTE-Mamba(全局+高频细节提取)

- 功能:同时捕捉全局依赖和高频边缘细节(如物体轮廓、纹理)。

- 实现逻辑:

- 对部分通道特征(占比 ξ)用双向扫描 Mamba 模块做全局建模,学习长距离关联。

- 对同一特征图做 Haar 小波变换(WT),拆分出 1 个低频(保留核心信息)和 3 个高频(边缘细节)特征图。

- 对小波变换后的特征图做局部卷积,再通过逆小波变换(IWT) 恢复原始尺寸,最终与 Mamba 输出相加,既保留全局信息又增强细节。

分支 2:MK-DeConv(多尺度局部感知)

- 功能:通过多 kernel 卷积捕捉不同尺度的局部特征(如小物体、局部结构)。

- 实现逻辑:

- 选取部分通道特征(占比 μ),拆分为 n 组(n 为正整数)。

- 每组用不同尺寸的奇数核(k=3、5、7...)做深度卷积,对应不同感受野。

- 拼接各组卷积结果,整合多尺度局部特征,提升模型对不同大小物体的适应性。

分支 3:Eliminate Redundant Identity(冗余压缩)

- 功能:减少高维特征的通道冗余,降低计算量。

- 实现逻辑:对剩余通道(占比 1-ξ-μ)直接做恒等映射,不额外添加卷积等复杂操作,仅传递核心特征,避免无效计算。

4. 设计权衡:效率与性能的平衡

- 通道比例控制:全局(ξ)、局部(μ)、冗余压缩(1-ξ-μ)的比例固定(如 {0.8,0.7,0.6} 和 {0.2,0.2,0.3},论文表 2),确保不同模型尺度(T2~B4)的一致性。

- 简化计算:MRFFI 三分支均基于“通道拆分”而非“特征图拆分”,避免空间维度的冗余计算;MK-DeConv 默认 n=1(kernel=3),通过小波变换间接扩大感受野(ERF 从 3 翻倍至 6),兼顾简单性与效果(论文 4.4 节消融实验)。

5. 辅助优化:正则化与适配策略

- 加入 DropPath 正则化:仅在较深的 B1 模型中使用(rate=0.03),防止过拟合,浅模型(T2、T4、S6)因深度不足省略,避免性能损失(论文补充材料 A.3)。

- 支持 分辨率适配:小模型(T2、T4)用低输入分辨率(192x192)保证速度,大模型(B2、B4)提升分辨率(384x384、512x512)提升性能,Block 内部结构不变,仅调整输入维度适配(论文 4.4 节)。

安装依赖

安装pywt

pip install pywaveletsYOLO11引入代码

在根目录下的ultralytics/nn/目录,新建一个mamba目录,然后新建一个以 lib_mamba为文件名的 文件夹, 把代码拷贝进去。

然后把MobileMamba代码库中的https://github.com/lewandofskee/MobileMamba/tree/main/model/lib_mamba整个文件夹考进去

然后手动再拷贝下面的代码到对应的文件中

csm_triton.py

import torch

import warnings

WITH_TRITON = True

# WITH_TRITON = False

try:

import triton

import triton.language as tl

except:

WITH_TRITON = False

# warnings.warn("Triton not installed, fall back to pytorch implements.")

# to make sure cached_property can be loaded for triton

if WITH_TRITON:

try:

from functools import cached_property

except:

# warnings.warn("if you are using py37, add this line to functools.py: "

# "cached_property = lambda func: property(lru_cache()(func))")

pass

# torch implementation ========================================

def cross_scan_fwd(x: torch.Tensor, in_channel_first=True, out_channel_first=True, scans=0):

if in_channel_first:

B, C, H, W = x.shape

if scans == 0:

y = x.new_empty((B, 4, C, H * W))

y[:, 0, :, :] = x.flatten(2, 3)

y[:, 1, :, :] = x.transpose(dim0=2, dim1=3).flatten(2, 3)

y[:, 2:4, :, :] = torch.flip(y[:, 0:2, :, :], dims=[-1])

elif scans == 1:

y = x.view(B, 1, C, H * W).repeat(1, 4, 1, 1)

elif scans == 2:

y = x.view(B, 1, C, H * W).repeat(1, 2, 1, 1)

y = torch.cat([y, y.flip(dims=[-1])], dim=1)

else:

B, H, W, C = x.shape

if scans == 0:

y = x.new_empty((B, H * W, 4, C))

y[:, :, 0, :] = x.flatten(1, 2)

y[:, :, 1, :] = x.transpose(dim0=1, dim1=2).flatten(1, 2)

y[:, :, 2:4, :] = torch.flip(y[:, :, 0:2, :], dims=[1])

elif scans == 1:

y = x.view(B, H * W, 1, C).repeat(1, 1, 4, 1)

elif scans == 2:

y = x.view(B, H * W, 1, C).repeat(1, 1, 2, 1)

y = torch.cat([y, y.flip(dims=[1])], dim=2)

if in_channel_first and (not out_channel_first):

y = y.permute(0, 3, 1, 2).contiguous()

elif (not in_channel_first) and out_channel_first:

y = y.permute(0, 2, 3, 1).contiguous()

return y

def cross_merge_fwd(y: torch.Tensor, in_channel_first=True, out_channel_first=True, scans=0):

if out_channel_first:

B, K, D, H, W = y.shape

y = y.view(B, K, D, -1)

if scans == 0:

y = y[:, 0:2] + y[:, 2:4].flip(dims=[-1]).view(B, 2, D, -1)

y = y[:, 0] + y[:, 1].view(B, -1, W, H).transpose(dim0=2, dim1=3).contiguous().view(B, D, -1)

elif scans == 1:

y = y.sum(1)

elif scans == 2:

y = y[:, 0:2] + y[:, 2:4].flip(dims=[-1]).view(B, 2, D, -1)

y = y.sum(1)

else:

B, H, W, K, D = y.shape

y = y.view(B, -1, K, D)

if scans == 0:

y = y[:, :, 0:2] + y[:, :, 2:4].flip(dims=[1]).view(B, -1, 2, D)

y = y[:, :, 0] + y[:, :, 1].view(B, W, H, -1).transpose(dim0=1, dim1=2).contiguous().view(B, -1, D)

elif scans == 1:

y = y.sum(2)

elif scans == 2:

y = y[:, :, 0:2] + y[:, :, 2:4].flip(dims=[1]).view(B, -1, 2, D)

y = y.sum(2)

if in_channel_first and (not out_channel_first):

y = y.permute(0, 2, 1).contiguous()

elif (not in_channel_first) and out_channel_first:

y = y.permute(0, 2, 1).contiguous()

return y

def cross_scan1b1_fwd(x: torch.Tensor, in_channel_first=True, out_channel_first=True, scans=0):

if in_channel_first:

B, _, C, H, W = x.shape

if scans == 0:

y = torch.stack([

x[:, 0].flatten(2, 3),

x[:, 1].transpose(dim0=2, dim1=3).flatten(2, 3),

torch.flip(x[:, 2].flatten(2, 3), dims=[-1]),

torch.flip(x[:, 3].transpose(dim0=2, dim1=3).flatten(2, 3), dims=[-1]),

], dim=1)

elif scans == 1:

y = x.flatten(2, 3)

elif scans == 2:

y = torch.stack([

x[:, 0].flatten(2, 3),

x[:, 1].flatten(2, 3),

torch.flip(x[:, 2].flatten(2, 3), dims=[-1]),

torch.flip(x[:, 3].flatten(2, 3), dims=[-1]),

], dim=1)

else:

B, H, W, _, C = x.shape

if scans == 0:

y = torch.stack([

x[:, :, :, 0].flatten(1, 2),

x[:, :, :, 1].transpose(dim0=1, dim1=2).flatten(1, 2),

torch.flip(x[:, :, :, 2].flatten(1, 2), dims=[1]),

torch.flip(x[:, :, :, 3].transpose(dim0=1, dim1=2).flatten(1, 2), dims=[1]),

], dim=2)

elif scans == 1:

y = x.flatten(1, 2)

elif scans == 2:

y = torch.stack([

x[:, 0].flatten(1, 2),

x[:, 1].flatten(1, 2),

torch.flip(x[:, 2].flatten(1, 2), dims=[-1]),

torch.flip(x[:, 3].flatten(1, 2), dims=[-1]),

], dim=2)

if in_channel_first and (not out_channel_first):

y = y.permute(0, 3, 1, 2).contiguous()

elif (not in_channel_first) and out_channel_first:

y = y.permute(0, 2, 3, 1).contiguous()

return y

def cross_merge1b1_fwd(y: torch.Tensor, in_channel_first=True, out_channel_first=True, scans=0):

if out_channel_first:

B, K, D, H, W = y.shape

y = y.view(B, K, D, -1)

if scans == 0:

y = torch.stack([

y[:, 0],

y[:, 1].view(B, -1, W, H).transpose(dim0=2, dim1=3).flatten(2, 3),

torch.flip(y[:, 2], dims=[-1]),

torch.flip(y[:, 3].view(B, -1, W, H).transpose(dim0=2, dim1=3).flatten(2, 3), dims=[-1]),

], dim=1)

elif scans == 1:

y = y

elif scans == 2:

y = torch.stack([

y[:, 0],

y[:, 1],

torch.flip(y[:, 2], dims=[-1]),

torch.flip(y[:, 3], dims=[-1]),

], dim=1)

else:

B, H, W, _, D = y.shape

y = y.view(B, -1, K, D)

if scans == 0:

y = torch.stack([

y[:, :, 0],

y[:, :, 1].view(B, W, H, -1).transpose(dim0=1, dim1=2).flatten(1, 2),

torch.flip(y[:, :, 2], dims=[1]),

torch.flip(y[:, :, 3].view(B, W, H, -1).transpose(dim0=1, dim1=2).flatten(1, 2), dims=[1]),

], dim=2)

elif scans == 1:

y = y

elif scans == 2:

y = torch.stack([

y[:, :, 0],

y[:, :, 1],

torch.flip(y[:, :, 2], dims=[1]),

torch.flip(y[:, :, 3], dims=[1]),

], dim=2)

if out_channel_first and (not in_channel_first):

y = y.permute(0, 3, 1, 2).contiguous()

elif (not out_channel_first) and in_channel_first:

y = y.permute(0, 2, 3, 1).contiguous()

return y

class CrossScanF(torch.autograd.Function):

@staticmethod

def forward(ctx, x: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=0):

# x: (B, C, H, W) | (B, H, W, C) | (B, 4, C, H, W) | (B, H, W, 4, C)

# y: (B, 4, C, H * W) | (B, H * W, 4, C)

ctx.in_channel_first = in_channel_first

ctx.out_channel_first = out_channel_first

ctx.one_by_one = one_by_one

ctx.scans = scans

if one_by_one:

B, K, C, H, W = x.shape

if not in_channel_first:

B, H, W, K, C = x.shape

else:

B, C, H, W = x.shape

if not in_channel_first:

B, H, W, C = x.shape

ctx.shape = (B, C, H, W)

_fn = cross_scan1b1_fwd if one_by_one else cross_scan_fwd

y = _fn(x, in_channel_first, out_channel_first, scans)

return y

@staticmethod

def backward(ctx, ys: torch.Tensor):

# out: (b, k, d, l)

in_channel_first = ctx.in_channel_first

out_channel_first = ctx.out_channel_first

one_by_one = ctx.one_by_one

scans = ctx.scans

B, C, H, W = ctx.shape

ys = ys.view(B, -1, C, H, W) if out_channel_first else ys.view(B, H, W, -1, C)

_fn = cross_merge1b1_fwd if one_by_one else cross_merge_fwd

y = _fn(ys, in_channel_first, out_channel_first, scans)

if one_by_one:

y = y.view(B, 4, -1, H, W) if in_channel_first else y.view(B, H, W, 4, -1)

else:

y = y.view(B, -1, H, W) if in_channel_first else y.view(B, H, W, -1)

return y, None, None, None, None

class CrossMergeF(torch.autograd.Function):

@staticmethod

def forward(ctx, ys: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=0):

# x: (B, C, H, W) | (B, H, W, C) | (B, 4, C, H, W) | (B, H, W, 4, C)

# y: (B, 4, C, H * W) | (B, H * W, 4, C)

ctx.in_channel_first = in_channel_first

ctx.out_channel_first = out_channel_first

ctx.one_by_one = one_by_one

ctx.scans = scans

B, K, C, H, W = ys.shape

if not out_channel_first:

B, H, W, K, C = ys.shape

ctx.shape = (B, C, H, W)

_fn = cross_merge1b1_fwd if one_by_one else cross_merge_fwd

y = _fn(ys, in_channel_first, out_channel_first, scans)

return y

@staticmethod

def backward(ctx, x: torch.Tensor):

# B, D, L = x.shape

# out: (b, k, d, h, w)

in_channel_first = ctx.in_channel_first

out_channel_first = ctx.out_channel_first

one_by_one = ctx.one_by_one

scans = ctx.scans

B, C, H, W = ctx.shape

if not one_by_one:

if in_channel_first:

x = x.view(B, C, H, W)

else:

x = x.view(B, H, W, C)

else:

if in_channel_first:

x = x.view(B, 4, C, H, W)

else:

x = x.view(B, H, W, 4, C)

_fn = cross_scan1b1_fwd if one_by_one else cross_scan_fwd

x = _fn(x, in_channel_first, out_channel_first, scans)

x = x.view(B, 4, C, H, W) if out_channel_first else x.view(B, H, W, 4, C)

return x, None, None, None, None

# triton implements ========================================

try:

@triton.jit

def triton_cross_scan_flex(

x, # (B, C, H, W) | (B, H, W, C) | (B, 4, C, H, W) | (B, H, W, 4, C)

y, # (B, 4, C, H, W) | (B, H, W, 4, C)

x_layout: tl.constexpr,

y_layout: tl.constexpr,

operation: tl.constexpr,

onebyone: tl.constexpr,

scans: tl.constexpr,

BC: tl.constexpr,

BH: tl.constexpr,

BW: tl.constexpr,

DC: tl.constexpr,

DH: tl.constexpr,

DW: tl.constexpr,

NH: tl.constexpr,

NW: tl.constexpr,

):

# x_layout = 0

# y_layout = 1 # 0 BCHW, 1 BHWC

# operation = 0 # 0 scan, 1 merge

# onebyone = 0 # 0 false, 1 true

# scans = 0 # 0 cross scan, 1 unidirectional, 2 bidirectional

i_hw, i_c, i_b = tl.program_id(0), tl.program_id(1), tl.program_id(2)

i_h, i_w = (i_hw // NW), (i_hw % NW)

_mask_h = (i_h * BH + tl.arange(0, BH)) < DH

_mask_w = (i_w * BW + tl.arange(0, BW)) < DW

_mask_hw = _mask_h[:, None] & _mask_w[None, :]

_for_C = min(DC - i_c * BC, BC)

HWRoute0 = i_h * BH * DW + tl.arange(0, BH)[:, None] * DW + i_w * BW + tl.arange(0, BW)[None, :]

HWRoute1 = i_w * BW * DH + tl.arange(0, BW)[None, :] * DH + i_h * BH + tl.arange(0, BH)[:, None] # trans

HWRoute2 = (NH - i_h - 1) * BH * DW + (BH - 1 - tl.arange(0, BH)[:, None]) * DW + (NW - i_w - 1) * BW + (BW - 1 - tl.arange(0, BW)[None, :]) + (DH - NH * BH) * DW + (DW - NW * BW) # flip

HWRoute3 = (NW - i_w - 1) * BW * DH + (BW - 1 - tl.arange(0, BW)[None, :]) * DH + (NH - i_h - 1) * BH + (BH - 1 - tl.arange(0, BH)[:, None]) + (DH - NH * BH) + (DW - NW * BW) * DH # trans + flip

if scans == 1:

HWRoute1 = HWRoute0

HWRoute2 = HWRoute0

HWRoute3 = HWRoute0

elif scans == 2:

HWRoute1 = HWRoute0

HWRoute3 = HWRoute2

_tmp1 = DC * DH * DW

y_ptr_base = y + i_b * 4 * _tmp1 + (i_c * BC * DH * DW if y_layout == 0 else i_c * BC)

if y_layout == 0:

p_y1 = y_ptr_base + HWRoute0

p_y2 = y_ptr_base + _tmp1 + HWRoute1

p_y3 = y_ptr_base + 2 * _tmp1 + HWRoute2

p_y4 = y_ptr_base + 3 * _tmp1 + HWRoute3

else:

p_y1 = y_ptr_base + HWRoute0 * 4 * DC

p_y2 = y_ptr_base + DC + HWRoute1 * 4 * DC

p_y3 = y_ptr_base + 2 * DC + HWRoute2 * 4 * DC

p_y4 = y_ptr_base + 3 * DC + HWRoute3 * 4 * DC

if onebyone == 0:

x_ptr_base = x + i_b * _tmp1 + (i_c * BC * DH * DW if x_layout == 0 else i_c * BC)

if x_layout == 0:

p_x = x_ptr_base + HWRoute0

else:

p_x = x_ptr_base + HWRoute0 * DC

if operation == 0:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_x = tl.load(p_x + _idx_x, mask=_mask_hw)

tl.store(p_y1 + _idx_y, _x, mask=_mask_hw)

tl.store(p_y2 + _idx_y, _x, mask=_mask_hw)

tl.store(p_y3 + _idx_y, _x, mask=_mask_hw)

tl.store(p_y4 + _idx_y, _x, mask=_mask_hw)

elif operation == 1:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_y1 = tl.load(p_y1 + _idx_y, mask=_mask_hw)

_y2 = tl.load(p_y2 + _idx_y, mask=_mask_hw)

_y3 = tl.load(p_y3 + _idx_y, mask=_mask_hw)

_y4 = tl.load(p_y4 + _idx_y, mask=_mask_hw)

tl.store(p_x + _idx_x, _y1 + _y2 + _y3 + _y4, mask=_mask_hw)

else:

x_ptr_base = x + i_b * 4 * _tmp1 + (i_c * BC * DH * DW if x_layout == 0 else i_c * BC)

if x_layout == 0:

p_x1 = x_ptr_base + HWRoute0

p_x2 = p_x1 + _tmp1

p_x3 = p_x2 + _tmp1

p_x4 = p_x3 + _tmp1

else:

p_x1 = x_ptr_base + HWRoute0 * 4 * DC

p_x2 = p_x1 + DC

p_x3 = p_x2 + DC

p_x4 = p_x3 + DC

if operation == 0:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

tl.store(p_y1 + _idx_y, tl.load(p_x1 + _idx_x, mask=_mask_hw), mask=_mask_hw)

tl.store(p_y2 + _idx_y, tl.load(p_x2 + _idx_x, mask=_mask_hw), mask=_mask_hw)

tl.store(p_y3 + _idx_y, tl.load(p_x3 + _idx_x, mask=_mask_hw), mask=_mask_hw)

tl.store(p_y4 + _idx_y, tl.load(p_x4 + _idx_x, mask=_mask_hw), mask=_mask_hw)

else:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

tl.store(p_x1 + _idx_x, tl.load(p_y1 + _idx_y), mask=_mask_hw)

tl.store(p_x2 + _idx_x, tl.load(p_y2 + _idx_y), mask=_mask_hw)

tl.store(p_x3 + _idx_x, tl.load(p_y3 + _idx_y), mask=_mask_hw)

tl.store(p_x4 + _idx_x, tl.load(p_y4 + _idx_y), mask=_mask_hw)

except:

def triton_cross_scan_flex():

pass

class CrossScanTritonF(torch.autograd.Function):

@staticmethod

def forward(ctx, x: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=0):

if one_by_one:

if in_channel_first:

B, _, C, H, W = x.shape

else:

B, H, W, _, C = x.shape

else:

if in_channel_first:

B, C, H, W = x.shape

else:

B, H, W, C = x.shape

B, C, H, W = int(B), int(C), int(H), int(W)

BC, BH, BW = 1, 32, 32

NH, NW, NC = triton.cdiv(H, BH), triton.cdiv(W, BW), triton.cdiv(C, BC)

ctx.in_channel_first = in_channel_first

ctx.out_channel_first = out_channel_first

ctx.one_by_one = one_by_one

ctx.scans = scans

ctx.shape = (B, C, H, W)

ctx.triton_shape = (BC, BH, BW, NC, NH, NW)

y = x.new_empty((B, 4, C, H * W)) if out_channel_first else x.new_empty((B, H * W, 4, C))

triton_cross_scan_flex[(NH * NW, NC, B)](

x.contiguous(), y,

(0 if in_channel_first else 1), (0 if out_channel_first else 1), 0, (0 if not one_by_one else 1), scans,

BC, BH, BW, C, H, W, NH, NW

)

return y

@staticmethod

def backward(ctx, y: torch.Tensor):

in_channel_first = ctx.in_channel_first

out_channel_first = ctx.out_channel_first

one_by_one = ctx.one_by_one

scans = ctx.scans

B, C, H, W = ctx.shape

BC, BH, BW, NC, NH, NW = ctx.triton_shape

if one_by_one:

x = y.new_empty((B, 4, C, H, W)) if in_channel_first else y.new_empty((B, H, W, 4, C))

else:

x = y.new_empty((B, C, H, W)) if in_channel_first else y.new_empty((B, H, W, C))

triton_cross_scan_flex[(NH * NW, NC, B)](

x, y.contiguous(),

(0 if in_channel_first else 1), (0 if out_channel_first else 1), 1, (0 if not one_by_one else 1), scans,

BC, BH, BW, C, H, W, NH, NW

)

return x, None, None, None, None

class CrossMergeTritonF(torch.autograd.Function):

@staticmethod

def forward(ctx, y: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=0):

if out_channel_first:

B, _, C, H, W = y.shape

else:

B, H, W, _, C = y.shape

B, C, H, W = int(B), int(C), int(H), int(W)

BC, BH, BW = 1, 32, 32

NH, NW, NC = triton.cdiv(H, BH), triton.cdiv(W, BW), triton.cdiv(C, BC)

ctx.in_channel_first = in_channel_first

ctx.out_channel_first = out_channel_first

ctx.one_by_one = one_by_one

ctx.scans = scans

ctx.shape = (B, C, H, W)

ctx.triton_shape = (BC, BH, BW, NC, NH, NW)

if one_by_one:

x = y.new_empty((B, 4, C, H * W)) if in_channel_first else y.new_empty((B, H * W, 4, C))

else:

x = y.new_empty((B, C, H * W)) if in_channel_first else y.new_empty((B, H * W, C))

triton_cross_scan_flex[(NH * NW, NC, B)](

x, y.contiguous(),

(0 if in_channel_first else 1), (0 if out_channel_first else 1), 1, (0 if not one_by_one else 1), scans,

BC, BH, BW, C, H, W, NH, NW

)

return x

@staticmethod

def backward(ctx, x: torch.Tensor):

in_channel_first = ctx.in_channel_first

out_channel_first = ctx.out_channel_first

one_by_one = ctx.one_by_one

scans = ctx.scans

B, C, H, W = ctx.shape

BC, BH, BW, NC, NH, NW = ctx.triton_shape

y = x.new_empty((B, 4, C, H, W)) if out_channel_first else x.new_empty((B, H, W, 4, C))

triton_cross_scan_flex[(NH * NW, NC, B)](

x.contiguous(), y,

(0 if in_channel_first else 1), (0 if out_channel_first else 1), 0, (0 if not one_by_one else 1), scans,

BC, BH, BW, C, H, W, NH, NW

)

return y, None, None, None, None, None

# @torch.compile(options={"triton.cudagraphs": True}, fullgraph=True)

def cross_scan_fn(x: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=0, force_torch=False):

# x: (B, C, H, W) | (B, H, W, C) | (B, 4, C, H, W) | (B, H, W, 4, C)

# y: (B, 4, C, L) | (B, L, 4, C)

# scans: 0: cross scan; 1 unidirectional; 2: bidirectional;

CSF = CrossScanTritonF if WITH_TRITON and x.is_cuda and (not force_torch) else CrossScanF

return CSF.apply(x, in_channel_first, out_channel_first, one_by_one, scans)

# @torch.compile(options={"triton.cudagraphs": True}, fullgraph=True)

def cross_merge_fn(y: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=0, force_torch=False):

# y: (B, 4, C, L) | (B, L, 4, C)

# x: (B, C, H * W) | (B, H * W, C) | (B, 4, C, H * W) | (B, H * W, 4, C)

# scans: 0: cross scan; 1 unidirectional; 2: bidirectional;

CMF = CrossMergeTritonF if WITH_TRITON and y.is_cuda and (not force_torch) else CrossMergeF

return CMF.apply(y, in_channel_first, out_channel_first, one_by_one, scans)

# checks =================================================================

class CHECK:

def check_csm_triton():

B, C, H, W = 2, 192, 56, 57

dtype=torch.float16

dtype=torch.float32

x = torch.randn((B, C, H, W), dtype=dtype, device=torch.device("cuda")).requires_grad_(True)

y = torch.randn((B, 4, C, H, W), dtype=dtype, device=torch.device("cuda")).requires_grad_(True)

x1 = x.clone().detach().requires_grad_(True)

y1 = y.clone().detach().requires_grad_(True)

def cross_scan(x: torch.Tensor):

B, C, H, W = x.shape

L = H * W

xs = torch.stack([

x.view(B, C, L),

torch.transpose(x, dim0=2, dim1=3).contiguous().view(B, C, L),

torch.flip(x.contiguous().view(B, C, L), dims=[-1]),

torch.flip(torch.transpose(x, dim0=2, dim1=3).contiguous().view(B, C, L), dims=[-1]),

], dim=1).view(B, 4, C, L)

return xs

def cross_merge(out_y: torch.Tensor):

B, K, D, H, W = out_y.shape

L = H * W

out_y = out_y.view(B, K, D, L)

inv_y = torch.flip(out_y[:, 2:4], dims=[-1]).view(B, 2, -1, L)

wh_y = torch.transpose(out_y[:, 1].view(B, -1, W, H), dim0=2, dim1=3).contiguous().view(B, -1, L)

invwh_y = torch.transpose(inv_y[:, 1].view(B, -1, W, H), dim0=2, dim1=3).contiguous().view(B, -1, L)

y = out_y[:, 0] + inv_y[:, 0] + wh_y + invwh_y

return y

def cross_scan_1b1(x: torch.Tensor):

B, K, C, H, W = x.shape

L = H * W

xs = torch.stack([

x[:, 0].view(B, C, L),

torch.transpose(x[:, 1], dim0=2, dim1=3).contiguous().view(B, C, L),

torch.flip(x[:, 2].contiguous().view(B, C, L), dims=[-1]),

torch.flip(torch.transpose(x[:, 3], dim0=2, dim1=3).contiguous().view(B, C, L), dims=[-1]),

], dim=1).view(B, 4, C, L)

return xs

def unidi_scan(x):

B, C, H, W = x.shape

x = x.view(B, 1, C, H * W).repeat(1, 4, 1, 1)

return x

def unidi_merge(ys):

B, K, C, H, W = ys.shape

return ys.view(B, 4, -1, H * W).sum(1)

def bidi_scan(x):

B, C, H, W = x.shape

x = x.view(B, 1, C, H * W).repeat(1, 2, 1, 1)

x = torch.cat([x, x.flip(dims=[-1])], dim=1)

return x

def bidi_merge(ys):

B, K, D, H, W = ys.shape

ys = ys.view(B, K, D, -1)

ys = ys[:, 0:2] + ys[:, 2:4].flip(dims=[-1]).view(B, 2, D, -1)

return ys.contiguous().sum(1)

if True:

res0 = triton.testing.do_bench(lambda :cross_scan(x))

res1 = triton.testing.do_bench(lambda :cross_scan_fn(x, True, True, False))

# res2 = triton.testing.do_bench(lambda :CrossScanTriton.apply(x))

res3 = triton.testing.do_bench(lambda :cross_merge(y))

res4 = triton.testing.do_bench(lambda :cross_merge_fn(y, True, True, False))

# res5 = triton.testing.do_bench(lambda :CrossMergeTriton.apply(y))

# print(res0, res1, res2, res3, res4, res5)

print(res0, res1, res3, res4)

res0 = triton.testing.do_bench(lambda :cross_scan(x).sum().backward())

res1 = triton.testing.do_bench(lambda :cross_scan_fn(x, True, True, False).sum().backward())

# res2 = triton.testing.do_bench(lambda :CrossScanTriton.apply(x).sum().backward())

res3 = triton.testing.do_bench(lambda :cross_merge(y).sum().backward())

res4 = triton.testing.do_bench(lambda :cross_merge_fn(y, True, True, False).sum().backward())

# res5 = triton.testing.do_bench(lambda :CrossMergeTriton.apply(y).sum().backward())

# print(res0, res1, res2, res3, res4, res5)

print(res0, res1, res3, res4)

print("test cross scan")

for (cs0, cm0, cs1, cm1) in [

# channel_first -> channel_first

(cross_scan, cross_merge, cross_scan_fn, cross_merge_fn),

(unidi_scan, unidi_merge, lambda x: cross_scan_fn(x, scans=1), lambda x: cross_merge_fn(x, scans=1)),

(bidi_scan, bidi_merge, lambda x: cross_scan_fn(x, scans=2), lambda x: cross_merge_fn(x, scans=2)),

# flex: BLC->BCL; BCL->BLC; BLC->BLC;

(cross_scan, cross_merge, lambda x: cross_scan_fn(x.permute(0, 2, 3, 1), in_channel_first=False), lambda x: cross_merge_fn(x, in_channel_first=False).permute(0, 2, 1)),

(cross_scan, cross_merge, lambda x: cross_scan_fn(x, out_channel_first=False).permute(0, 2, 3, 1), lambda x: cross_merge_fn(x.permute(0, 3, 4, 1, 2), out_channel_first=False)),

(cross_scan, cross_merge, lambda x: cross_scan_fn(x.permute(0, 2, 3, 1), in_channel_first=False, out_channel_first=False).permute(0, 2, 3, 1), lambda x: cross_merge_fn(x.permute(0, 3, 4, 1, 2), in_channel_first=False, out_channel_first=False).permute(0, 2, 1)),

# previous

# (cross_scan, cross_merge, lambda x: CrossScanTriton.apply(x), lambda x: CrossMergeTriton.apply(x)),

# (unidi_scan, unidi_merge, lambda x: getCSM(1)[0].apply(x), lambda x: getCSM(1)[1].apply(x)),

# (bidi_scan, bidi_merge, lambda x: getCSM(2)[0].apply(x), lambda x: getCSM(2)[1].apply(x)),

]:

x.grad, x1.grad, y.grad, y1.grad = None, None, None, None

o0 = cs0(x)

o1 = cs1(x1)

o0.backward(y.view(B, 4, C, H * W))

o1.backward(y.view(B, 4, C, H * W))

print((o0 - o1).abs().max())

print((x.grad - x1.grad).abs().max())

o0 = cm0(y)

o1 = cm1(y1)

o0.backward(x.view(B, C, H * W))

o1.backward(x.view(B, C, H * W))

print((o0 - o1).abs().max())

print((y.grad - y1.grad).abs().max())

x.grad, x1.grad, y.grad, y1.grad = None, None, None, None

print("===============", flush=True)

print("test cross scan one by one")

for (cs0, cs1) in [

(cross_scan_1b1, lambda x: cross_scan_fn(x, one_by_one=True)),

# (cross_scan_1b1, lambda x: CrossScanTriton1b1.apply(x)),

]:

o0 = cs0(y)

o1 = cs1(y1)

o0.backward(y.view(B, 4, C, H * W))

o1.backward(y.view(B, 4, C, H * W))

print((o0 - o1).abs().max())

print((y.grad - y1.grad).abs().max())

x.grad, x1.grad, y.grad, y1.grad = None, None, None, None

print("===============", flush=True)

if __name__ == "__main__":

CHECK.check_csm_triton()

csm_tritonk2.py

import torch

import warnings

import os

WITH_TRITON = True

# WITH_TRITON = False

try:

import triton

import triton.language as tl

except:

WITH_TRITON = False

# warnings.warn("Triton not installed, fall back to pytorch implements.")

pass

# to make sure cached_property can be loaded for triton

if WITH_TRITON:

try:

from functools import cached_property

except:

# warnings.warn("if you are using py37, add this line to functools.py: "

# "cached_property = lambda func: property(lru_cache()(func))")

pass

# torch implementation ========================================

def cross_scan_fwd(x: torch.Tensor, in_channel_first=True, out_channel_first=True, scans=2):

if in_channel_first:

B, C, H, W = x.shape

if scans == 0:

y = x.new_empty((B, 4, C, H * W))

y[:, 0, :, :] = x.flatten(2, 3)

y[:, 1, :, :] = x.transpose(dim0=2, dim1=3).flatten(2, 3)

y[:, 2:4, :, :] = torch.flip(y[:, 0:2, :, :], dims=[-1])

elif scans == 1:

y = x.view(B, 1, C, H * W).repeat(1, 2, 1, 1)

elif scans == 2:

y = x.view(B, 1, C, H * W)

y = torch.cat([y, y.flip(dims=[-1])], dim=1)

else:

B, H, W, C = x.shape

if scans == 0:

y = x.new_empty((B, H * W, 4, C))

y[:, :, 0, :] = x.flatten(1, 2)

y[:, :, 1, :] = x.transpose(dim0=1, dim1=2).flatten(1, 2)

y[:, :, 2:4, :] = torch.flip(y[:, :, 0:2, :], dims=[1])

elif scans == 1:

y = x.view(B, H * W, 1, C).repeat(1, 1, 2, 1)

elif scans == 2:

y = x.view(B, H * W, 1, C)

y = torch.cat([y, y.flip(dims=[1])], dim=2)

if in_channel_first and (not out_channel_first):

y = y.permute(0, 3, 1, 2).contiguous()

elif (not in_channel_first) and out_channel_first:

y = y.permute(0, 2, 3, 1).contiguous()

return y

def cross_merge_fwd(y: torch.Tensor, in_channel_first=True, out_channel_first=True, scans=2):

if out_channel_first:

B, K, D, H, W = y.shape

y = y.view(B, K, D, -1)

if scans == 0:

y = y[:, 0:2] + y[:, 2:4].flip(dims=[-1]).view(B, 2, D, -1)

y = y[:, 0] + y[:, 1].view(B, -1, W, H).transpose(dim0=2, dim1=3).contiguous().view(B, D, -1)

elif scans == 1:

y = y.sum(1)

elif scans == 2:

y = y[:, 0] + y[:, 1].flip(dims=[-1]).view(B, 1, D, -1)

y = y.sum(1)

else:

B, H, W, K, D = y.shape

y = y.view(B, -1, K, D)

if scans == 0:

y = y[:, :, 0:2] + y[:, :, 2:4].flip(dims=[1]).view(B, -1, 2, D)

y = y[:, :, 0] + y[:, :, 1].view(B, W, H, -1).transpose(dim0=1, dim1=2).contiguous().view(B, -1, D)

elif scans == 1:

y = y.sum(2)

elif scans == 2:

y = y[:, :, 0] + y[:, :, 1].flip(dims=[1]).view(B, -1, 1, D)

y = y.sum(2)

if in_channel_first and (not out_channel_first):

y = y.permute(0, 2, 1).contiguous()

elif (not in_channel_first) and out_channel_first:

y = y.permute(0, 2, 1).contiguous()

return y

def cross_scan1b1_fwd(x: torch.Tensor, in_channel_first=True, out_channel_first=True, scans=2):

if in_channel_first:

B, _, C, H, W = x.shape

if scans == 0:

y = torch.stack([

x[:, 0].flatten(2, 3),

x[:, 1].transpose(dim0=2, dim1=3).flatten(2, 3),

torch.flip(x[:, 2].flatten(2, 3), dims=[-1]),

torch.flip(x[:, 3].transpose(dim0=2, dim1=3).flatten(2, 3), dims=[-1]),

], dim=1)

elif scans == 1:

y = x.flatten(2, 3)

elif scans == 2:

y = torch.stack([

x[:, 0].flatten(2, 3),

x[:, 1].flatten(2, 3),

torch.flip(x[:, 2].flatten(2, 3), dims=[-1]),

torch.flip(x[:, 3].flatten(2, 3), dims=[-1]),

], dim=1)

else:

B, H, W, _, C = x.shape

if scans == 0:

y = torch.stack([

x[:, :, :, 0].flatten(1, 2),

x[:, :, :, 1].transpose(dim0=1, dim1=2).flatten(1, 2),

torch.flip(x[:, :, :, 2].flatten(1, 2), dims=[1]),

torch.flip(x[:, :, :, 3].transpose(dim0=1, dim1=2).flatten(1, 2), dims=[1]),

], dim=2)

elif scans == 1:

y = x.flatten(1, 2)

elif scans == 2:

y = torch.stack([

x[:, 0].flatten(1, 2),

x[:, 1].flatten(1, 2),

torch.flip(x[:, 2].flatten(1, 2), dims=[-1]),

torch.flip(x[:, 3].flatten(1, 2), dims=[-1]),

], dim=2)

if in_channel_first and (not out_channel_first):

y = y.permute(0, 3, 1, 2).contiguous()

elif (not in_channel_first) and out_channel_first:

y = y.permute(0, 2, 3, 1).contiguous()

return y

def cross_merge1b1_fwd(y: torch.Tensor, in_channel_first=True, out_channel_first=True, scans=2):

if out_channel_first:

B, K, D, H, W = y.shape

y = y.view(B, K, D, -1)

if scans == 0:

y = torch.stack([

y[:, 0],

y[:, 1].view(B, -1, W, H).transpose(dim0=2, dim1=3).flatten(2, 3),

torch.flip(y[:, 2], dims=[-1]),

torch.flip(y[:, 3].view(B, -1, W, H).transpose(dim0=2, dim1=3).flatten(2, 3), dims=[-1]),

], dim=1)

elif scans == 1:

y = y

elif scans == 2:

y = torch.stack([

y[:, 0],

y[:, 1],

torch.flip(y[:, 2], dims=[-1]),

torch.flip(y[:, 3], dims=[-1]),

], dim=1)

else:

B, H, W, _, D = y.shape

y = y.view(B, -1, 2, D)

if scans == 0:

y = torch.stack([

y[:, :, 0],

y[:, :, 1].view(B, W, H, -1).transpose(dim0=1, dim1=2).flatten(1, 2),

torch.flip(y[:, :, 2], dims=[1]),

torch.flip(y[:, :, 3].view(B, W, H, -1).transpose(dim0=1, dim1=2).flatten(1, 2), dims=[1]),

], dim=2)

elif scans == 1:

y = y

elif scans == 2:

y = torch.stack([

y[:, :, 0],

y[:, :, 1],

torch.flip(y[:, :, 2], dims=[1]),

torch.flip(y[:, :, 3], dims=[1]),

], dim=2)

if out_channel_first and (not in_channel_first):

y = y.permute(0, 3, 1, 2).contiguous()

elif (not out_channel_first) and in_channel_first:

y = y.permute(0, 2, 3, 1).contiguous()

return y

class CrossScan(torch.nn.Module):

def __init__(self, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2):

super(CrossScan, self).__init__()

self.in_channel_first = in_channel_first

self.out_channel_first = out_channel_first

self.one_by_one = one_by_one

self.scans = scans

def forward(self, x: torch.Tensor):

if self.one_by_one:

B, K, C, H, W = x.shape

if not self.in_channel_first:

B, H, W, K, C = x.shape

else:

B, C, H, W = x.shape

if not self.in_channel_first:

B, H, W, C = x.shape

self.shape = (B, C, H, W)

_fn = cross_scan1b1_fwd if self.one_by_one else cross_scan_fwd

y = _fn(x, self.in_channel_first, self.out_channel_first, self.scans)

return y

def backward(self, ys: torch.Tensor):

B, C, H, W = self.shape

ys = ys.view(B, -1, C, H, W) if self.out_channel_first else ys.view(B, H, W, -1, C)

_fn = cross_merge1b1_fwd if self.one_by_one else cross_merge_fwd

y = _fn(ys, self.in_channel_first, self.out_channel_first, self.scans)

if self.one_by_one:

y = y.view(B, 2, -1, H, W) if self.in_channel_first else y.view(B, H, W, 2, -1)

else:

y = y.view(B, -1, H, W) if self.in_channel_first else y.view(B, H, W, -1)

return y

class CrossMerge(torch.nn.Module):

def __init__(self, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2):

super(CrossMerge, self).__init__()

self.in_channel_first = in_channel_first

self.out_channel_first = out_channel_first

self.one_by_one = one_by_one

self.scans = scans

def forward(self, ys: torch.Tensor):

B, K, C, H, W = ys.shape

if not self.out_channel_first:

B, H, W, K, C = ys.shape

self.shape = (B, C, H, W)

_fn = cross_merge1b1_fwd if self.one_by_one else cross_merge_fwd

y = _fn(ys, self.in_channel_first, self.out_channel_first, self.scans)

return y

def backward(self, x: torch.Tensor):

B, C, H, W = self.shape

if not self.one_by_one:

if self.in_channel_first:

x = x.view(B, C, H, W)

else:

x = x.view(B, H, W, C)

else:

if self.in_channel_first:

x = x.view(B, 2, C, H, W)

else:

x = x.view(B, H, W, 2, C)

_fn = cross_scan1b1_fwd if self.one_by_one else cross_scan_fwd

x = _fn(x, self.in_channel_first, self.out_channel_first, self.scans)

x = x.view(B, 2, C, H, W) if self.out_channel_first else x.view(B, H, W, 2, C)

return x

class CrossScanF(torch.autograd.Function):

@staticmethod

def forward(ctx, x: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2):

# x: (B, C, H, W) | (B, H, W, C) | (B, 4, C, H, W) | (B, H, W, 2, C)

# y: (B, 2, C, H * W) | (B, H * W, 2, C)

ctx.in_channel_first = in_channel_first

ctx.out_channel_first = out_channel_first

ctx.one_by_one = one_by_one

ctx.scans = scans

if one_by_one:

B, K, C, H, W = x.shape

if not in_channel_first:

B, H, W, K, C = x.shape

else:

B, C, H, W = x.shape

if not in_channel_first:

B, H, W, C = x.shape

ctx.shape = (B, C, H, W)

_fn = cross_scan1b1_fwd if one_by_one else cross_scan_fwd

y = _fn(x, in_channel_first, out_channel_first, scans)

return y

@staticmethod

def backward(ctx, ys: torch.Tensor):

# out: (b, k, d, l)

in_channel_first = ctx.in_channel_first

out_channel_first = ctx.out_channel_first

one_by_one = ctx.one_by_one

scans = ctx.scans

B, C, H, W = ctx.shape

ys = ys.view(B, -1, C, H, W) if out_channel_first else ys.view(B, H, W, -1, C)

_fn = cross_merge1b1_fwd if one_by_one else cross_merge_fwd

y = _fn(ys, in_channel_first, out_channel_first, scans)

if one_by_one:

y = y.view(B, 2, -1, H, W) if in_channel_first else y.view(B, H, W, 2, -1)

else:

y = y.view(B, -1, H, W) if in_channel_first else y.view(B, H, W, -1)

return y, None, None, None, None

class CrossMergeF(torch.autograd.Function):

@staticmethod

def forward(ctx, ys: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2):

# x: (B, C, H, W) | (B, H, W, C) | (B, 2, C, H, W) | (B, H, W, 2, C)

# y: (B, 2, C, H * W) | (B, H * W, 4, C)

ctx.in_channel_first = in_channel_first

ctx.out_channel_first = out_channel_first

ctx.one_by_one = one_by_one

ctx.scans = scans

B, K, C, H, W = ys.shape

if not out_channel_first:

B, H, W, K, C = ys.shape

ctx.shape = (B, C, H, W)

_fn = cross_merge1b1_fwd if one_by_one else cross_merge_fwd

y = _fn(ys, in_channel_first, out_channel_first, scans)

return y

@staticmethod

def backward(ctx, x: torch.Tensor):

# B, D, L = x.shape

# out: (b, k, d, h, w)

in_channel_first = ctx.in_channel_first

out_channel_first = ctx.out_channel_first

one_by_one = ctx.one_by_one

scans = ctx.scans

B, C, H, W = ctx.shape

if not one_by_one:

if in_channel_first:

x = x.view(B, C, H, W)

else:

x = x.view(B, H, W, C)

else:

if in_channel_first:

x = x.view(B, 2, C, H, W)

else:

x = x.view(B, H, W, 2, C)

_fn = cross_scan1b1_fwd if one_by_one else cross_scan_fwd

x = _fn(x, in_channel_first, out_channel_first, scans)

x = x.view(B, 2, C, H, W) if out_channel_first else x.view(B, H, W, 2, C)

return x, None, None, None, None

# triton implements ========================================

try:

@triton.jit

def triton_cross_scan_flex_k2(

x, # (B, C, H, W) | (B, H, W, C) | (B, 4, C, H, W) | (B, H, W, 4, C)

y, # (B, 4, C, H, W) | (B, H, W, 4, C)

x_layout: tl.constexpr,

y_layout: tl.constexpr,

operation: tl.constexpr,

onebyone: tl.constexpr,

scans: tl.constexpr,

BC: tl.constexpr,

BH: tl.constexpr,

BW: tl.constexpr,

DC: tl.constexpr,

DH: tl.constexpr,

DW: tl.constexpr,

NH: tl.constexpr,

NW: tl.constexpr,

):

# x_layout = 0

# y_layout = 1 # 0 BCHW, 1 BHWC

# operation = 0 # 0 scan, 1 merge

# onebyone = 0 # 0 false, 1 true

# scans = 0 # 0 cross scan, 1 unidirectional, 2 bidirectional

i_hw, i_c, i_b = tl.program_id(0), tl.program_id(1), tl.program_id(2)

i_h, i_w = (i_hw // NW), (i_hw % NW)

_mask_h = (i_h * BH + tl.arange(0, BH)) < DH

_mask_w = (i_w * BW + tl.arange(0, BW)) < DW

_mask_hw = _mask_h[:, None] & _mask_w[None, :]

_for_C = min(DC - i_c * BC, BC)

HWRoute0 = i_h * BH * DW + tl.arange(0, BH)[:, None] * DW + i_w * BW + tl.arange(0, BW)[None, :]

# HWRoute1 = i_w * BW * DH + tl.arange(0, BW)[None, :] * DH + i_h * BH + tl.arange(0, BH)[:, None] # trans

HWRoute2 = (NH - i_h - 1) * BH * DW + (BH - 1 - tl.arange(0, BH)[:, None]) * DW + (NW - i_w - 1) * BW + (BW - 1 - tl.arange(0, BW)[None, :]) + (DH - NH * BH) * DW + (DW - NW * BW) # flip

# HWRoute3 = (NW - i_w - 1) * BW * DH + (BW - 1 - tl.arange(0, BW)[None, :]) * DH + (NH - i_h - 1) * BH + (BH - 1 - tl.arange(0, BH)[:, None]) + (DH - NH * BH) + (DW - NW * BW) * DH # trans + flip

if scans == 1:

HWRoute2 = HWRoute0

_tmp1 = DC * DH * DW

y_ptr_base = y + i_b * 4 * _tmp1 + (i_c * BC * DH * DW if y_layout == 0 else i_c * BC)

if y_layout == 0:

p_y1 = y_ptr_base + HWRoute0

# p_y2 = y_ptr_base + _tmp1 + HWRoute1

p_y3 = y_ptr_base + 2 * _tmp1 + HWRoute2

# p_y4 = y_ptr_base + 3 * _tmp1 + HWRoute3

else:

p_y1 = y_ptr_base + HWRoute0 * 4 * DC

# p_y2 = y_ptr_base + DC + HWRoute1 * 4 * DC

p_y3 = y_ptr_base + 2 * DC + HWRoute2 * 4 * DC

# p_y4 = y_ptr_base + 3 * DC + HWRoute3 * 4 * DC

if onebyone == 0:

x_ptr_base = x + i_b * _tmp1 + (i_c * BC * DH * DW if x_layout == 0 else i_c * BC)

if x_layout == 0:

p_x = x_ptr_base + HWRoute0

else:

p_x = x_ptr_base + HWRoute0 * DC

if operation == 0:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_x = tl.load(p_x + _idx_x, mask=_mask_hw)

tl.store(p_y1 + _idx_y, _x, mask=_mask_hw)

# tl.store(p_y2 + _idx_y, _x, mask=_mask_hw)

tl.store(p_y3 + _idx_y, _x, mask=_mask_hw)

# tl.store(p_y4 + _idx_y, _x, mask=_mask_hw)

elif operation == 1:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_y1 = tl.load(p_y1 + _idx_y, mask=_mask_hw)

# _y2 = tl.load(p_y2 + _idx_y, mask=_mask_hw)

_y3 = tl.load(p_y3 + _idx_y, mask=_mask_hw)

# _y4 = tl.load(p_y4 + _idx_y, mask=_mask_hw)

# tl.store(p_x + _idx_x, _y1 + _y2 + _y3 + _y4, mask=_mask_hw)

tl.store(p_x + _idx_x, _y1 + _y3, mask=_mask_hw)

else:

x_ptr_base = x + i_b * 4 * _tmp1 + (i_c * BC * DH * DW if x_layout == 0 else i_c * BC)

if x_layout == 0:

p_x1 = x_ptr_base + HWRoute0

p_x2 = p_x1 + _tmp1

p_x3 = p_x2 + _tmp1

p_x4 = p_x3 + _tmp1

else:

p_x1 = x_ptr_base + HWRoute0 * 4 * DC

p_x2 = p_x1 + DC

p_x3 = p_x2 + DC

p_x4 = p_x3 + DC

if operation == 0:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

tl.store(p_y1 + _idx_y, tl.load(p_x1 + _idx_x, mask=_mask_hw), mask=_mask_hw)

# tl.store(p_y2 + _idx_y, tl.load(p_x2 + _idx_x, mask=_mask_hw), mask=_mask_hw)

tl.store(p_y3 + _idx_y, tl.load(p_x3 + _idx_x, mask=_mask_hw), mask=_mask_hw)

# tl.store(p_y4 + _idx_y, tl.load(p_x4 + _idx_x, mask=_mask_hw), mask=_mask_hw)

else:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

tl.store(p_x1 + _idx_x, tl.load(p_y1 + _idx_y), mask=_mask_hw)

# tl.store(p_x2 + _idx_x, tl.load(p_y2 + _idx_y), mask=_mask_hw)

tl.store(p_x3 + _idx_x, tl.load(p_y3 + _idx_y), mask=_mask_hw)

# tl.store(p_x4 + _idx_x, tl.load(p_y4 + _idx_y), mask=_mask_hw)

@triton.jit

def triton_cross_scan_flex_k2(

x, # (B, C, H, W) | (B, H, W, C) | (B, 4, C, H, W) | (B, H, W, 4, C)

y, # (B, 4, C, H, W) | (B, H, W, 4, C)

x_layout: tl.constexpr,

y_layout: tl.constexpr,

operation: tl.constexpr,

onebyone: tl.constexpr,

scans: tl.constexpr,

BC: tl.constexpr,

BH: tl.constexpr,

BW: tl.constexpr,

DC: tl.constexpr,

DH: tl.constexpr,

DW: tl.constexpr,

NH: tl.constexpr,

NW: tl.constexpr,

):

i_hw, i_c, i_b = tl.program_id(0), tl.program_id(1), tl.program_id(2)

i_h, i_w = (i_hw // NW), (i_hw % NW)

_mask_h = (i_h * BH + tl.arange(0, BH)) < DH

_mask_w = (i_w * BW + tl.arange(0, BW)) < DW

_mask_hw = _mask_h[:, None] & _mask_w[None, :]

_for_C = min(DC - i_c * BC, BC)

HWRoute0 = i_h * BH * DW + tl.arange(0, BH)[:, None] * DW + i_w * BW + tl.arange(0, BW)[None, :]

HWRoute2 = (NH - i_h - 1) * BH * DW + (BH - 1 - tl.arange(0, BH)[:, None]) * DW + (NW - i_w - 1) * BW + (BW - 1 - tl.arange(0, BW)[None, :]) + (DH - NH * BH) * DW + (DW - NW * BW) # flip

if scans == 1:

HWRoute2 = HWRoute0

_tmp1 = DC * DH * DW

y_ptr_base = y + i_b * 4 * _tmp1 + (i_c * BC * DH * DW if y_layout == 0 else i_c * BC)

if y_layout == 0:

p_y1 = y_ptr_base + HWRoute0

p_y2 = y_ptr_base + 2 * _tmp1 + HWRoute2

else:

p_y1 = y_ptr_base + HWRoute0 * 4 * DC

p_y2 = y_ptr_base + 2 * DC + HWRoute2 * 4 * DC

if onebyone == 0:

x_ptr_base = x + i_b * _tmp1 + (i_c * BC * DH * DW if x_layout == 0 else i_c * BC)

if x_layout == 0:

p_x = x_ptr_base + HWRoute0

else:

p_x = x_ptr_base + HWRoute0 * DC

if operation == 0:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_x = tl.load(p_x + _idx_x, mask=_mask_hw)

tl.store(p_y1 + _idx_y, _x, mask=_mask_hw)

tl.store(p_y2 + _idx_y, _x, mask=_mask_hw)

elif operation == 1:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_y1 = tl.load(p_y1 + _idx_y, mask=_mask_hw)

_y2 = tl.load(p_y2 + _idx_y, mask=_mask_hw)

tl.store(p_x + _idx_x, _y1 + _y2, mask=_mask_hw)

else:

x_ptr_base = x + i_b * 4 * _tmp1 + (i_c * BC * DH * DW if x_layout == 0 else i_c * BC)

if x_layout == 0:

p_x1 = x_ptr_base + HWRoute0

p_x2 = p_x1 + 2 * _tmp1

else:

p_x1 = x_ptr_base + HWRoute0 * 4 * DC

p_x2 = p_x1 + 2 * DC

if operation == 0:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

tl.store(p_y1 + _idx_y, tl.load(p_x1 + _idx_x, mask=_mask_hw), mask=_mask_hw)

tl.store(p_y2 + _idx_y, tl.load(p_x2 + _idx_x, mask=_mask_hw), mask=_mask_hw)

else:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

tl.store(p_x1 + _idx_x, tl.load(p_y1 + _idx_y), mask=_mask_hw)

tl.store(p_x2 + _idx_x, tl.load(p_y2 + _idx_y), mask=_mask_hw)

@triton.jit

def triton_cross_scan_flex_k2(

x, # (B, C, H, W) | (B, H, W, C) | (B, 4, C, H, W) | (B, H, W, 4, C)

y, # (B, 4, C, H, W) | (B, H, W, 4, C)

x_layout: tl.constexpr,

y_layout: tl.constexpr,

operation: tl.constexpr,

onebyone: tl.constexpr,

scans: tl.constexpr,

BC: tl.constexpr,

BH: tl.constexpr,

BW: tl.constexpr,

DC: tl.constexpr,

DH: tl.constexpr,

DW: tl.constexpr,

NH: tl.constexpr,

NW: tl.constexpr,

):

i_hw, i_c, i_b = tl.program_id(0), tl.program_id(1), tl.program_id(2)

i_h, i_w = (i_hw // NW), (i_hw % NW)

_mask_h = (i_h * BH + tl.arange(0, BH)) < DH

_mask_w = (i_w * BW + tl.arange(0, BW)) < DW

_mask_hw = _mask_h[:, None] & _mask_w[None, :]

_for_C = min(DC - i_c * BC, BC)

HWRoute0 = i_h * BH * DW + tl.arange(0, BH)[:, None] * DW + i_w * BW + tl.arange(0, BW)[None, :]

HWRoute2 = (NH - i_h - 1) * BH * DW + (BH - 1 - tl.arange(0, BH)[:, None]) * DW + (NW - i_w - 1) * BW + (BW - 1 - tl.arange(0, BW)[None, :]) + (DH - NH * BH) * DW + (DW - NW * BW) # flip

if scans == 1:

HWRoute2 = HWRoute0

_tmp1 = DC * DH * DW

y_ptr_base = y + i_b * 2 * _tmp1 + (i_c * BC * DH * DW if y_layout == 0 else i_c * BC)

if y_layout == 0:

p_y1 = y_ptr_base + HWRoute0

p_y2 = y_ptr_base + 1 * _tmp1 + HWRoute2

else:

p_y1 = y_ptr_base + HWRoute0 * 4 * DC

p_y2 = y_ptr_base + 1 * DC + HWRoute2 * 4 * DC

if onebyone == 0:

x_ptr_base = x + i_b * _tmp1 + (i_c * BC * DH * DW if x_layout == 0 else i_c * BC)

if x_layout == 0:

p_x = x_ptr_base + HWRoute0

else:

p_x = x_ptr_base + HWRoute0 * DC

if operation == 0:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_x = tl.load(p_x + _idx_x, mask=_mask_hw)

tl.store(p_y1 + _idx_y, _x, mask=_mask_hw)

tl.store(p_y2 + _idx_y, _x, mask=_mask_hw)

elif operation == 1:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_y1 = tl.load(p_y1 + _idx_y, mask=_mask_hw)

_y2 = tl.load(p_y2 + _idx_y, mask=_mask_hw)

tl.store(p_x + _idx_x, _y1 + _y2, mask=_mask_hw)

else:

x_ptr_base = x + i_b * 4 * _tmp1 + (i_c * BC * DH * DW if x_layout == 0 else i_c * BC)

if x_layout == 0:

p_x1 = x_ptr_base + HWRoute0

p_x2 = p_x1 + 2 * _tmp1

else:

p_x1 = x_ptr_base + HWRoute0 * 4 * DC

p_x2 = p_x1 + 2 * DC

if operation == 0:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_x1 = tl.load(p_x1 + _idx_x, mask=_mask_hw)

_x2 = tl.load(p_x2 + _idx_x, mask=_mask_hw)

tl.store(p_y1 + _idx_y, _x1, mask=_mask_hw)

tl.store(p_y2 + _idx_y, _x2, mask=_mask_hw)

else:

for idxc in range(_for_C):

_idx_x = idxc * DH * DW if x_layout == 0 else idxc

_idx_y = idxc * DH * DW if y_layout == 0 else idxc

_y1 = tl.load(p_y1 + _idx_y, mask=_mask_hw)

_y2 = tl.load(p_y2 + _idx_y, mask=_mask_hw)

tl.store(p_x1 + _idx_x, _y1, mask=_mask_hw)

tl.store(p_x2 + _idx_x, _y2, mask=_mask_hw)

except:

def triton_cross_scan_flex():

pass

class CrossScanTritonFk2(torch.autograd.Function):

@staticmethod

def forward(ctx, x: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2):

if one_by_one:

if in_channel_first:

B, _, C, H, W = x.shape

else:

B, H, W, _, C = x.shape

else:

if in_channel_first:

B, C, H, W = x.shape

else:

B, H, W, C = x.shape

B, C, H, W = int(B), int(C), int(H), int(W)

BC, BH, BW = 1, 32, 32

NH, NW, NC = triton.cdiv(H, BH), triton.cdiv(W, BW), triton.cdiv(C, BC)

ctx.in_channel_first = in_channel_first

ctx.out_channel_first = out_channel_first

ctx.one_by_one = one_by_one

ctx.scans = scans

ctx.shape = (B, C, H, W)

ctx.triton_shape = (BC, BH, BW, NC, NH, NW)

y = x.new_empty((B, 2, C, H * W)) if out_channel_first else x.new_empty((B, H * W, 2, C))

triton_cross_scan_flex_k2[(NH * NW, NC, B)](

x.contiguous(), y,

(0 if in_channel_first else 1), (0 if out_channel_first else 1), 0, (0 if not one_by_one else 1), scans,

BC, BH, BW, C, H, W, NH, NW

)

return y

@staticmethod

def backward(ctx, y: torch.Tensor):

in_channel_first = ctx.in_channel_first

out_channel_first = ctx.out_channel_first

one_by_one = ctx.one_by_one

scans = ctx.scans

B, C, H, W = ctx.shape

BC, BH, BW, NC, NH, NW = ctx.triton_shape

if one_by_one:

x = y.new_empty((B, 2, C, H, W)) if in_channel_first else y.new_empty((B, H, W, 2, C))

else:

x = y.new_empty((B, C, H, W)) if in_channel_first else y.new_empty((B, H, W, C))

triton_cross_scan_flex_k2[(NH * NW, NC, B)](

x, y.contiguous(),

(0 if in_channel_first else 1), (0 if out_channel_first else 1), 1, (0 if not one_by_one else 1), scans,

BC, BH, BW, C, H, W, NH, NW

)

return x, None, None, None, None

class CrossMergeTritonFk2(torch.autograd.Function):

@staticmethod

def forward(ctx, y: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2):

if out_channel_first:

B, _, C, H, W = y.shape

else:

B, H, W, _, C = y.shape

B, C, H, W = int(B), int(C), int(H), int(W)

BC, BH, BW = 1, 32, 32

NH, NW, NC = triton.cdiv(H, BH), triton.cdiv(W, BW), triton.cdiv(C, BC)

ctx.in_channel_first = in_channel_first

ctx.out_channel_first = out_channel_first

ctx.one_by_one = one_by_one

ctx.scans = scans

ctx.shape = (B, C, H, W)

ctx.triton_shape = (BC, BH, BW, NC, NH, NW)

if one_by_one:

x = y.new_empty((B, 2, C, H * W)) if in_channel_first else y.new_empty((B, H * W, 2, C))

else:

x = y.new_empty((B, C, H * W)) if in_channel_first else y.new_empty((B, H * W, C))

triton_cross_scan_flex_k2[(NH * NW, NC, B)](

x, y.contiguous(),

(0 if in_channel_first else 1), (0 if out_channel_first else 1), 1, (0 if not one_by_one else 1), scans,

BC, BH, BW, C, H, W, NH, NW

)

return x

@staticmethod

def backward(ctx, x: torch.Tensor):

in_channel_first = ctx.in_channel_first

out_channel_first = ctx.out_channel_first

one_by_one = ctx.one_by_one

scans = ctx.scans

B, C, H, W = ctx.shape

BC, BH, BW, NC, NH, NW = ctx.triton_shape

y = x.new_empty((B, 2, C, H, W)) if out_channel_first else x.new_empty((B, H, W, 2, C))

triton_cross_scan_flex_k2[(NH * NW, NC, B)](

x.contiguous(), y,

(0 if in_channel_first else 1), (0 if out_channel_first else 1), 0, (0 if not one_by_one else 1), scans,

BC, BH, BW, C, H, W, NH, NW

)

return y, None, None, None, None, None

# @torch.compile(options={"triton.cudagraphs": True}, fullgraph=True)

def cross_scan_fn_k2(x: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2, force_torch=False):

# x: (B, C, H, W) | (B, H, W, C) | (B, 2, C, H, W) | (B, H, W, 2, C)

# y: (B, 2, C, L) | (B, L, 2, C)

# scans: 0: cross scan; 1 unidirectional; 2: bidirectional;

CSF = CrossScanTritonFk2 if WITH_TRITON and x.is_cuda and (not force_torch) else CrossScanF

return CSF.apply(x, in_channel_first, out_channel_first, one_by_one, scans)

# @torch.compile(options={"triton.cudagraphs": True}, fullgraph=True)

def cross_merge_fn_k2(y: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2, force_torch=False):

# y: (B, 2, C, L) | (B, L, 2, C)

# x: (B, C, H * W) | (B, H * W, C) | (B, 2, C, H * W) | (B, H * W, 2, C)

# scans: 0: cross scan; 1 unidirectional; 2: bidirectional;

CMF = CrossMergeTritonFk2 if WITH_TRITON and y.is_cuda and (not force_torch) else CrossMergeF

return CMF.apply(y, in_channel_first, out_channel_first, one_by_one, scans)

def cross_scan_fn_k2_torch(x: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2, force_torch=False):

cross_scan = CrossScan(in_channel_first, out_channel_first, one_by_one, scans)

return cross_scan(x)

def cross_merge_fn_k2_torch(y: torch.Tensor, in_channel_first=True, out_channel_first=True, one_by_one=False, scans=2, force_torch=False):

cross_merge = CrossMerge(in_channel_first, out_channel_first, one_by_one, scans)

return cross_merge(y)

# checks =================================================================

class CHECK:

def check_csm_triton():

B, C, H, W = 2, 192, 56, 57

dtype=torch.float16

dtype=torch.float32

x = torch.randn((B, C, H, W), dtype=dtype, device=torch.device("cuda")).requires_grad_(True)

y = torch.randn((B, 2, C, H, W), dtype=dtype, device=torch.device("cuda")).requires_grad_(True)

x1 = x.clone().detach().requires_grad_(True)

y1 = y.clone().detach().requires_grad_(True)

def cross_scan(x: torch.Tensor):

B, C, H, W = x.shape

L = H * W

xs = torch.stack([

x.view(B, C, L),

torch.transpose(x, dim0=2, dim1=3).contiguous().view(B, C, L),

torch.flip(x.contiguous().view(B, C, L), dims=[-1]),

torch.flip(torch.transpose(x, dim0=2, dim1=3).contiguous().view(B, C, L), dims=[-1]),

], dim=1).view(B, 4, C, L)

return xs

def cross_merge(out_y: torch.Tensor):

B, K, D, H, W = out_y.shape

L = H * W

out_y = out_y.view(B, K, D, L)

inv_y = torch.flip(out_y[:, 2:4], dims=[-1]).view(B, 2, -1, L)

wh_y = torch.transpose(out_y[:, 1].view(B, -1, W, H), dim0=2, dim1=3).contiguous().view(B, -1, L)

invwh_y = torch.transpose(inv_y[:, 1].view(B, -1, W, H), dim0=2, dim1=3).contiguous().view(B, -1, L)

y = out_y[:, 0] + inv_y[:, 0] + wh_y + invwh_y

return y

def cross_scan_1b1(x: torch.Tensor):

B, K, C, H, W = x.shape

L = H * W

xs = torch.stack([

x[:, 0].view(B, C, L),

torch.transpose(x[:, 1], dim0=2, dim1=3).contiguous().view(B, C, L),

torch.flip(x[:, 2].contiguous().view(B, C, L), dims=[-1]),

torch.flip(torch.transpose(x[:, 3], dim0=2, dim1=3).contiguous().view(B, C, L), dims=[-1]),

], dim=1).view(B, 2, C, L)

return xs

def unidi_scan(x):

B, C, H, W = x.shape

x = x.view(B, 1, C, H * W).repeat(1, 4, 1, 1)

return x

def unidi_merge(ys):

B, K, C, H, W = ys.shape

return ys.view(B, 4, -1, H * W).sum(1)

def bidi_scan(x):

B, C, H, W = x.shape

x = x.view(B, 1, C, H * W).repeat(1, 2, 1, 1)

x = torch.cat([x, x.flip(dims=[-1])], dim=1)

return x

def bidi_merge(ys):

B, K, D, H, W = ys.shape

ys = ys.view(B, K, D, -1)

ys = ys[:, 0:2] + ys[:, 2:4].flip(dims=[-1]).view(B, 2, D, -1)

return ys.contiguous().sum(1)

if True:

# res0 = triton.testing.do_bench(lambda :cross_scan(x))

res1 = triton.testing.do_bench(lambda :cross_scan_fn_k2(x, True, True, False))

# res2 = triton.testing.do_bench(lambda :CrossScanTriton.apply(x))

# res3 = triton.testing.do_bench(lambda :cross_merge(y))

res4 = triton.testing.do_bench(lambda :cross_merge_fn_k2(y, True, True, False))

# res5 = triton.testing.do_bench(lambda :CrossMergeTriton.apply(y))

# print(res0, res1, res2, res3, res4, res5)

print(res0, res1, res3, res4)

res0 = triton.testing.do_bench(lambda :cross_scan(x).sum().backward())

res1 = triton.testing.do_bench(lambda :cross_scan_fn_k2(x, True, True, False).sum().backward())

# res2 = triton.testing.do_bench(lambda :CrossScanTriton.apply(x).sum().backward())

res3 = triton.testing.do_bench(lambda :cross_merge(y).sum().backward())

res4 = triton.testing.do_bench(lambda :cross_merge_fn_k2(y, True, True, False).sum().backward())

# res5 = triton.testing.do_bench(lambda :CrossMergeTriton.apply(y).sum().backward())

# print(res0, res1, res2, res3, res4, res5)

print(res0, res1, res3, res4)

print("test cross scan")

for (cs0, cm0, cs1, cm1) in [

# channel_first -> channel_first

(cross_scan, cross_merge, cross_scan_fn_k2, cross_merge_fn_k2),

(unidi_scan, unidi_merge, lambda x: cross_scan_fn_k2(x, scans=1), lambda x: cross_merge_fn_k2(x, scans=1)),

(bidi_scan, bidi_merge, lambda x: cross_scan_fn_k2(x, scans=2), lambda x: cross_merge_fn_k2(x, scans=2)),

# flex: BLC->BCL; BCL->BLC; BLC->BLC;

(cross_scan, cross_merge, lambda x: cross_scan_fn_k2(x.permute(0, 2, 3, 1), in_channel_first=False), lambda x: cross_merge_fn_k2(x, in_channel_first=False).permute(0, 2, 1)),

(cross_scan, cross_merge, lambda x: cross_scan_fn_k2(x, out_channel_first=False).permute(0, 2, 3, 1), lambda x: cross_merge_fn_k2(x.permute(0, 3, 4, 1, 2), out_channel_first=False)),

(cross_scan, cross_merge, lambda x: cross_scan_fn_k2(x.permute(0, 2, 3, 1), in_channel_first=False, out_channel_first=False).permute(0, 2, 3, 1), lambda x: cross_merge_fn_k2(x.permute(0, 3, 4, 1, 2), in_channel_first=False, out_channel_first=False).permute(0, 2, 1)),

# previous

# (cross_scan, cross_merge, lambda x: CrossScanTriton.apply(x), lambda x: CrossMergeTriton.apply(x)),

# (unidi_scan, unidi_merge, lambda x: getCSM(1)[0].apply(x), lambda x: getCSM(1)[1].apply(x)),

# (bidi_scan, bidi_merge, lambda x: getCSM(2)[0].apply(x), lambda x: getCSM(2)[1].apply(x)),

]:

x.grad, x1.grad, y.grad, y1.grad = None, None, None, None

o0 = cs0(x)

o1 = cs1(x1)

o0.backward(y.view(B, 2, C, H * W))

o1.backward(y.view(B, 2, C, H * W))

print((o0 - o1).abs().max())

print((x.grad - x1.grad).abs().max())

o0 = cm0(y)

o1 = cm1(y1)

o0.backward(x.view(B, C, H * W))

o1.backward(x.view(B, C, H * W))

print((o0 - o1).abs().max())

print((y.grad - y1.grad).abs().max())

x.grad, x1.grad, y.grad, y1.grad = None, None, None, None

print("===============", flush=True)

print("test cross scan one by one")

for (cs0, cs1) in [

(cross_scan_1b1, lambda x: cross_scan_fn_k2(x, one_by_one=True)),

# (cross_scan_1b1, lambda x: CrossScanTriton1b1.apply(x)),

]:

o0 = cs0(y)

o1 = cs1(y1)

o0.backward(y.view(B, 2, C, H * W))

o1.backward(y.view(B, 2, C, H * W))

print((o0 - o1).abs().max())

print((y.grad - y1.grad).abs().max())

x.grad, x1.grad, y.grad, y1.grad = None, None, None, None

print("===============", flush=True)

if __name__ == "__main__":

CHECK.check_csm_triton()

csms6s.py

import time

import torch

import warnings

WITH_SELECTIVESCAN_OFLEX = True

WITH_SELECTIVESCAN_CORE = False

WITH_SELECTIVESCAN_MAMBA = True

try:

import selective_scan_cuda_oflex

except ImportError:

WITH_SELECTIVESCAN_OFLEX = False

# warnings.warn("Can not import selective_scan_cuda_oflex. This affects speed.")

# print("Can not import selective_scan_cuda_oflex. This affects speed.", flush=True)

try:

import selective_scan_cuda_core

except ImportError:

WITH_SELECTIVESCAN_CORE = False

try:

import selective_scan_cuda

except ImportError:

WITH_SELECTIVESCAN_MAMBA = False

def selective_scan_torch(

u: torch.Tensor, # (B, K * C, L)

delta: torch.Tensor, # (B, K * C, L)

A: torch.Tensor, # (K * C, N)

B: torch.Tensor, # (B, K, N, L)

C: torch.Tensor, # (B, K, N, L)

D: torch.Tensor = None, # (K * C)

delta_bias: torch.Tensor = None, # (K * C)

delta_softplus=True,

oflex=True,

*args,

**kwargs

):

dtype_in = u.dtype

Batch, K, N, L = B.shape

KCdim = u.shape[1]

Cdim = int(KCdim / K)

assert u.shape == (Batch, KCdim, L)

assert delta.shape == (Batch, KCdim, L)

assert A.shape == (KCdim, N)

assert C.shape == B.shape

if delta_bias is not None:

delta = delta + delta_bias[..., None]

if delta_softplus:

delta = torch.nn.functional.softplus(delta)

u, delta, A, B, C = u.float(), delta.float(), A.float(), B.float(), C.float()

B = B.view(Batch, K, 1, N, L).repeat(1, 1, Cdim, 1, 1).view(Batch, KCdim, N, L)

C = C.view(Batch, K, 1, N, L).repeat(1, 1, Cdim, 1, 1).view(Batch, KCdim, N, L)

deltaA = torch.exp(torch.einsum('bdl,dn->bdln', delta, A))

deltaB_u = torch.einsum('bdl,bdnl,bdl->bdln', delta, B, u)

if True:

x = A.new_zeros((Batch, KCdim, N))

ys = []

for i in range(L):

x = deltaA[:, :, i, :] * x + deltaB_u[:, :, i, :]

y = torch.einsum('bdn,bdn->bd', x, C[:, :, :, i])

ys.append(y)

y = torch.stack(ys, dim=2) # (B, C, L)

out = y if D is None else y + u * D.unsqueeze(-1)

return out if oflex else out.to(dtype=dtype_in)

class SelectiveScanCuda(torch.autograd.Function):

@staticmethod

@torch.cuda.amp.custom_fwd

def forward(ctx, u, delta, A, B, C, D=None, delta_bias=None, delta_softplus=False, oflex=True, backend=None):

ctx.delta_softplus = delta_softplus

backend = "oflex" if WITH_SELECTIVESCAN_OFLEX and (backend is None) else backend

backend = "core" if WITH_SELECTIVESCAN_CORE and (backend is None) else backend

backend = "mamba" if WITH_SELECTIVESCAN_MAMBA and (backend is None) else backend

ctx.backend = backend

if backend == "oflex":

out, x, *rest = selective_scan_cuda_oflex.fwd(u, delta, A, B, C, D, delta_bias, delta_softplus, 1, oflex)

elif backend == "core":

out, x, *rest = selective_scan_cuda_core.fwd(u, delta, A, B, C, D, delta_bias, delta_softplus, 1)

elif backend == "mamba":

out, x, *rest = selective_scan_cuda.fwd(u, delta, A, B, C, D, None, delta_bias, delta_softplus)

ctx.save_for_backward(u, delta, A, B, C, D, delta_bias, x)

return out

@staticmethod

@torch.cuda.amp.custom_bwd

def backward(ctx, dout, *args):

u, delta, A, B, C, D, delta_bias, x = ctx.saved_tensors

backend = ctx.backend

if dout.stride(-1) != 1:

dout = dout.contiguous()

if backend == "oflex":

du, ddelta, dA, dB, dC, dD, ddelta_bias, *rest = selective_scan_cuda_oflex.bwd(

u, delta, A, B, C, D, delta_bias, dout, x, ctx.delta_softplus, 1

)

elif backend == "core":

du, ddelta, dA, dB, dC, dD, ddelta_bias, *rest = selective_scan_cuda_core.bwd(

u, delta, A, B, C, D, delta_bias, dout, x, ctx.delta_softplus, 1

)

elif backend == "mamba":

du, ddelta, dA, dB, dC, dD, ddelta_bias, *rest = selective_scan_cuda.bwd(

u, delta, A, B, C, D, None, delta_bias, dout, x, None, None, ctx.delta_softplus,

False

)

return du, ddelta, dA, dB, dC, dD, ddelta_bias, None, None, None

def selective_scan_fn(

u: torch.Tensor, # (B, K * C, L)

delta: torch.Tensor, # (B, K * C, L)

A: torch.Tensor, # (K * C, N)

B: torch.Tensor, # (B, K, N, L)

C: torch.Tensor, # (B, K, N, L)

D: torch.Tensor = None, # (K * C)

delta_bias: torch.Tensor = None, # (K * C)

delta_softplus=True,

oflex=True,

backend=None,

):

WITH_CUDA = (WITH_SELECTIVESCAN_OFLEX or WITH_SELECTIVESCAN_CORE or WITH_SELECTIVESCAN_MAMBA)

fn = selective_scan_torch if backend == "torch" or (not WITH_CUDA) else SelectiveScanCuda.apply

return fn(u, delta, A, B, C, D, delta_bias, delta_softplus, oflex, backend)

# fvcore flops =======================================

def print_jit_input_names(inputs):

print("input params: ", end=" ", flush=True)

try:

for i in range(10):

print(inputs[i].debugName(), end=" ", flush=True)

except Exception as e:

pass

print("", flush=True)

def flops_selective_scan_fn(B=1, L=256, D=768, N=16, with_D=True, with_Z=False, with_complex=False):

"""

u: r(B D L)

delta: r(B D L)

A: r(D N)

B: r(B N L)

C: r(B N L)

D: r(D)

z: r(B D L)

delta_bias: r(D), fp32

ignores:

[.float(), +, .softplus, .shape, new_zeros, repeat, stack, to(dtype), silu]

"""

assert not with_complex

# https://github.com/state-spaces/mamba/issues/110

flops = 9 * B * L * D * N

if with_D:

flops += B * D * L

if with_Z:

flops += B * D * L

return flops

# this is only for selective_scan_ref...

def flops_selective_scan_ref(B=1, L=256, D=768, N=16, with_D=True, with_Z=False, with_Group=True, with_complex=False):

"""

u: r(B D L)

delta: r(B D L)

A: r(D N)

B: r(B N L)

C: r(B N L)

D: r(D)

z: r(B D L)

delta_bias: r(D), fp32

ignores:

[.float(), +, .softplus, .shape, new_zeros, repeat, stack, to(dtype), silu]

"""

import numpy as np

# fvcore.nn.jit_handles

def get_flops_einsum(input_shapes, equation):

np_arrs = [np.zeros(s) for s in input_shapes]

optim = np.einsum_path(equation, *np_arrs, optimize="optimal")[1]

for line in optim.split("\n"):

if "optimized flop" in line.lower():

# divided by 2 because we count MAC (multiply-add counted as one flop)

flop = float(np.floor(float(line.split(":")[-1]) / 2))

return flop

assert not with_complex

flops = 0 # below code flops = 0

flops += get_flops_einsum([[B, D, L], [D, N]], "bdl,dn->bdln")

if with_Group:

flops += get_flops_einsum([[B, D, L], [B, N, L], [B, D, L]], "bdl,bnl,bdl->bdln")

else:

flops += get_flops_einsum([[B, D, L], [B, D, N, L], [B, D, L]], "bdl,bdnl,bdl->bdln")

in_for_flops = B * D * N

if with_Group:

in_for_flops += get_flops_einsum([[B, D, N], [B, D, N]], "bdn,bdn->bd")

else:

in_for_flops += get_flops_einsum([[B, D, N], [B, N]], "bdn,bn->bd")

flops += L * in_for_flops

if with_D:

flops += B * D * L

if with_Z:

flops += B * D * L

return flops

def selective_scan_flop_jit(inputs, outputs, backend="prefixsum", verbose=True):

if verbose:

print_jit_input_names(inputs)

flops_fn = flops_selective_scan_ref if backend == "naive" else flops_selective_scan_fn

B, D, L = inputs[0].type().sizes()

N = inputs[2].type().sizes()[1]

flops = flops_fn(B=B, L=L, D=D, N=N, with_D=True, with_Z=False)

return flops

if __name__ == "__main__":

def params(B, K, C, N, L, device = torch.device("cuda"), itype = torch.float):

As = (-0.5 * torch.rand(K * C, N, device=device, dtype=torch.float32)).requires_grad_()

Bs = torch.randn((B, K, N, L), device=device, dtype=itype).requires_grad_()

Cs = torch.randn((B, K, N, L), device=device, dtype=itype).requires_grad_()

Ds = torch.randn((K * C), device=device, dtype=torch.float32).requires_grad_()

u = torch.randn((B, K * C, L), device=device, dtype=itype).requires_grad_()

delta = (0.5 * torch.rand((B, K * C, L), device=device, dtype=itype)).requires_grad_()

delta_bias = (0.5 * torch.rand((K * C), device=device, dtype=torch.float32)).requires_grad_()

return u, delta, As, Bs, Cs, Ds, delta_bias

def bench(func, xs, Warmup=30, NTimes=20):

import time

torch.cuda.synchronize()

for r in range(Warmup):

for x in xs:

func(x)

torch.cuda.synchronize()

tim0 = time.time()

for r in range(NTimes):

for x in xs:

func(x)

torch.cuda.synchronize()

return (time.time() - tim0) / NTimes

def check():

u, delta, As, Bs, Cs, Ds, delta_bias = params(1, 4, 16, 8, 512, itype=torch.float16)

u1, delta1, As1, Bs1, Cs1, Ds1, delta_bias1 = [x.clone().detach().requires_grad_() for x in [u, delta, As, Bs, Cs, Ds, delta_bias]]

# out_ref = selective_scan_fn(u, delta, As, Bs, Cs, Ds, delta_bias, True, backend="torch")

out = selective_scan_fn(u1, delta1, As1, Bs1, Cs1, Ds1, delta_bias1, True, backend="oflex")

out_ref = selective_scan_fn(u, delta, As, Bs, Cs, Ds, delta_bias, True, backend="mamba")

print((out_ref - out).abs().max())

out.sum().backward()

out_ref.sum().backward()

for x, y in zip([u, As, Bs, Cs, Ds, delta, delta_bias], [u1, As1, Bs1, Cs1, Ds1, delta1, delta_bias1]):

print((x.grad - y.grad).abs().max())

u, delta, As, Bs, Cs, Ds, delta_bias = params(128, 4, 96, 8, 56 * 56)

print(bench(lambda x: selective_scan_fn(x[0], x[1], x[2], x[3], x[4], x[5], x[6], True, backend="oflex"), [(u, delta, As, Bs, Cs, Ds, delta_bias),]))

print(bench(lambda x: selective_scan_fn(x[0], x[1], x[2], x[3], x[4], x[5], x[6], True, backend="mamba"), [(u, delta, As, Bs, Cs, Ds, delta_bias),]))

print(bench(lambda x: selective_scan_fn(x[0], x[1], x[2], x[3], x[4], x[5], x[6], True, backend="torch"), [(u, delta, As, Bs, Cs, Ds, delta_bias),]))

check()

vmambanew.py

import os

import time

import math

import copy

from functools import partial

from typing import Optional, Callable, Any

from collections import OrderedDict

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.utils.checkpoint as checkpoint

from timm.models.layers import DropPath, trunc_normal_

DropPath.__repr__ = lambda self: f"timm.DropPath({self.drop_prob})"

# train speed is slower after enabling this opts.

# torch.backends.cudnn.enabled = True

# torch.backends.cudnn.benchmark = True

# torch.backends.cudnn.deterministic = True

try:

from .csm_triton import cross_scan_fn, cross_merge_fn

except:

from csm_triton import cross_scan_fn, cross_merge_fn

try:

from .csm_tritonk2 import cross_scan_fn_k2, cross_merge_fn_k2

from .csm_tritonk2 import cross_scan_fn_k2_torch, cross_merge_fn_k2_torch

except:

from csm_tritonk2 import cross_scan_fn_k2, cross_merge_fn_k2

from csm_tritonk2 import cross_scan_fn_k2_torch, cross_merge_fn_k2_torch

try:

from .csms6s import selective_scan_fn, selective_scan_flop_jit

except:

from csms6s import selective_scan_fn, selective_scan_flop_jit

# FLOPs counter not prepared fro mamba2

# try:

# from .mamba2.ssd_minimal import selective_scan_chunk_fn

# except:

# from mamba2.ssd_minimal import selective_scan_chunk_fn

# =====================================================

# we have this class as linear and conv init differ from each other

# this function enable loading from both conv2d or linear

class Linear2d(nn.Linear):

def forward(self, x: torch.Tensor):

# B, C, H, W = x.shape

return F.conv2d(x, self.weight[:, :, None, None], self.bias)

def _load_from_state_dict(self, state_dict, prefix, local_metadata, strict, missing_keys, unexpected_keys,

error_msgs):

state_dict[prefix + "weight"] = state_dict[prefix + "weight"].view(self.weight.shape)

return super()._load_from_state_dict(state_dict, prefix, local_metadata, strict, missing_keys, unexpected_keys,

error_msgs)

class LayerNorm2d(nn.LayerNorm):

def forward(self, x: torch.Tensor):

x = x.permute(0, 2, 3, 1)

x = nn.functional.layer_norm(x, self.normalized_shape, self.weight, self.bias, self.eps)

x = x.permute(0, 3, 1, 2)

return x

class PatchMerging2D(nn.Module):

def __init__(self, dim, out_dim=-1, norm_layer=nn.LayerNorm, channel_first=False):

super().__init__()

self.dim = dim

Linear = Linear2d if channel_first else nn.Linear

self._patch_merging_pad = self._patch_merging_pad_channel_first if channel_first else self._patch_merging_pad_channel_last

self.reduction = Linear(4 * dim, (2 * dim) if out_dim < 0 else out_dim, bias=False)

self.norm = norm_layer(4 * dim)

@staticmethod

def _patch_merging_pad_channel_last(x: torch.Tensor):

H, W, _ = x.shape[-3:]

if (W % 2 != 0) or (H % 2 != 0):

x = F.pad(x, (0, 0, 0, W % 2, 0, H % 2))

x0 = x[..., 0::2, 0::2, :] # ... H/2 W/2 C

x1 = x[..., 1::2, 0::2, :] # ... H/2 W/2 C

x2 = x[..., 0::2, 1::2, :] # ... H/2 W/2 C

x3 = x[..., 1::2, 1::2, :] # ... H/2 W/2 C

x = torch.cat([x0, x1, x2, x3], -1) # ... H/2 W/2 4*C

return x

@staticmethod

def _patch_merging_pad_channel_first(x: torch.Tensor):

H, W = x.shape[-2:]

if (W % 2 != 0) or (H % 2 != 0):

x = F.pad(x, (0, 0, 0, W % 2, 0, H % 2))

x0 = x[..., 0::2, 0::2] # ... H/2 W/2

x1 = x[..., 1::2, 0::2] # ... H/2 W/2

x2 = x[..., 0::2, 1::2] # ... H/2 W/2

x3 = x[..., 1::2, 1::2] # ... H/2 W/2

x = torch.cat([x0, x1, x2, x3], 1) # ... H/2 W/2 4*C

return x

def forward(self, x):

x = self._patch_merging_pad(x)

x = self.norm(x)

x = self.reduction(x)

return x

class Permute(nn.Module):

def __init__(self, *args):

super().__init__()

self.args = args

def forward(self, x: torch.Tensor):

return x.permute(*self.args)

class Mlp(nn.Module):

def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.GELU, drop=0.,

channels_first=False):

super().__init__()

out_features = out_features or in_features

hidden_features = hidden_features or in_features

Linear = Linear2d if channels_first else nn.Linear

self.fc1 = Linear(in_features, hidden_features)

self.act = act_layer()

self.fc2 = Linear(hidden_features, out_features)

self.drop = nn.Dropout(drop)

def forward(self, x):

x = self.fc1(x)

x = self.act(x)

x = self.drop(x)

x = self.fc2(x)

x = self.drop(x)

return x

class gMlp(nn.Module):

def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.GELU, drop=0.,

channels_first=False):

super().__init__()

self.channel_first = channels_first

out_features = out_features or in_features

hidden_features = hidden_features or in_features

Linear = Linear2d if channels_first else nn.Linear

self.fc1 = Linear(in_features, 2 * hidden_features)

self.act = act_layer()

self.fc2 = Linear(hidden_features, out_features)

self.drop = nn.Dropout(drop)

def forward(self, x: torch.Tensor):

x = self.fc1(x)

x, z = x.chunk(2, dim=(1 if self.channel_first else -1))

x = self.fc2(x * self.act(z))

x = self.drop(x)

return x

class SoftmaxSpatial(nn.Softmax):

def forward(self, x: torch.Tensor):

if self.dim == -1:

B, C, H, W = x.shape